Today, for a good time, I applied my Reader Toolbox to the latest issue of ITAL for two purposes: 1) to see whether the Toolbox could function, and 2) to determine the degree to which I could extract meaningful themes from the issue. Well, the Toolbox functioned, in that it neither crashed nor output invalid data, and I do believe I could pull out themes, in that each of the issue's authors wrote about something distinctive, and I could identify those things. My process is described below.

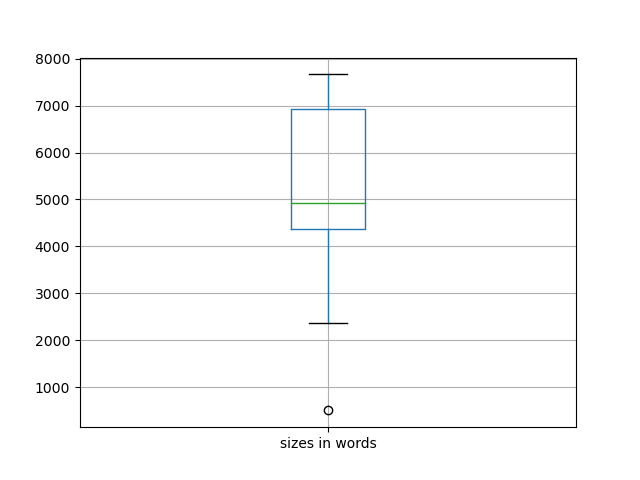

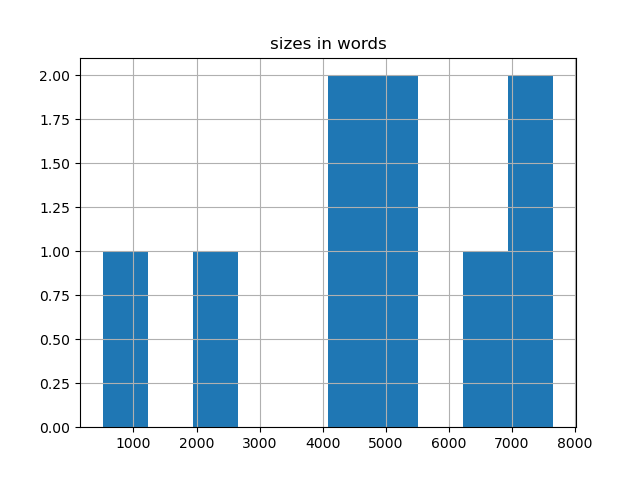

I began by tediously downloading the PDF version of each article as it was linked from the issue's home page. I saved each file with a name in a form similar to author-title-date.pdf. For example: ridley-explainable-2022.pdf. I then used the Toolbox's "build" command to convert the PDF files into a data set amenable to computation. When that process was done, I summarized the result using the Toolbox's "summarize" command. The result is everything below the "Basic characteristics" header, and it outlines rudimentary descriptive statistics about the issue.
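The naming convention can be checked before building a data set. The following is a minimal sketch, not part of the Toolbox: the `parse_name` function and the regular expression are hypothetical, and they assume a lowercase author, a single-word title, and a four-digit year.

```python
import re
from pathlib import Path

# Assumed pattern: author-title-date.pdf, where the date is a four-digit year.
# This pattern is an illustration only; the Toolbox does not require it.
PATTERN = re.compile(r"^(?P<author>[a-z]+)-(?P<title>[a-z0-9]+)-(?P<date>\d{4})\.pdf$")


def parse_name(filename):
    """Return the author, title, and date from a file name, or None if it does not match."""
    match = PATTERN.match(Path(filename).name)
    return match.groupdict() if match else None


print(parse_name("ridley-explainable-2022.pdf"))
# {'author': 'ridley', 'title': 'explainable', 'date': '2022'}
```

File names that do not follow the convention return `None`, which makes it easy to spot a misnamed download before running the "build" command.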

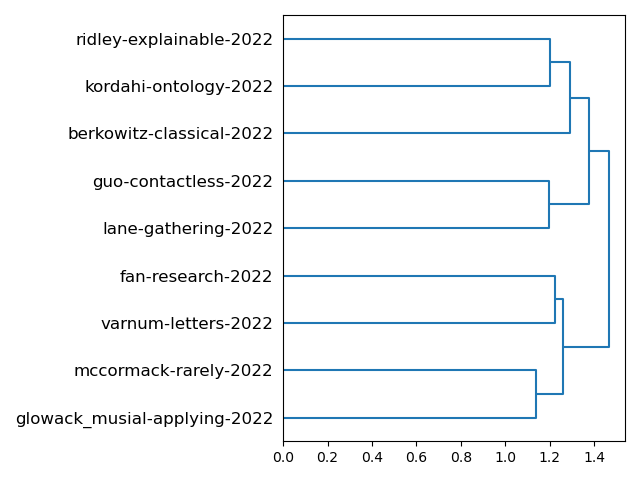

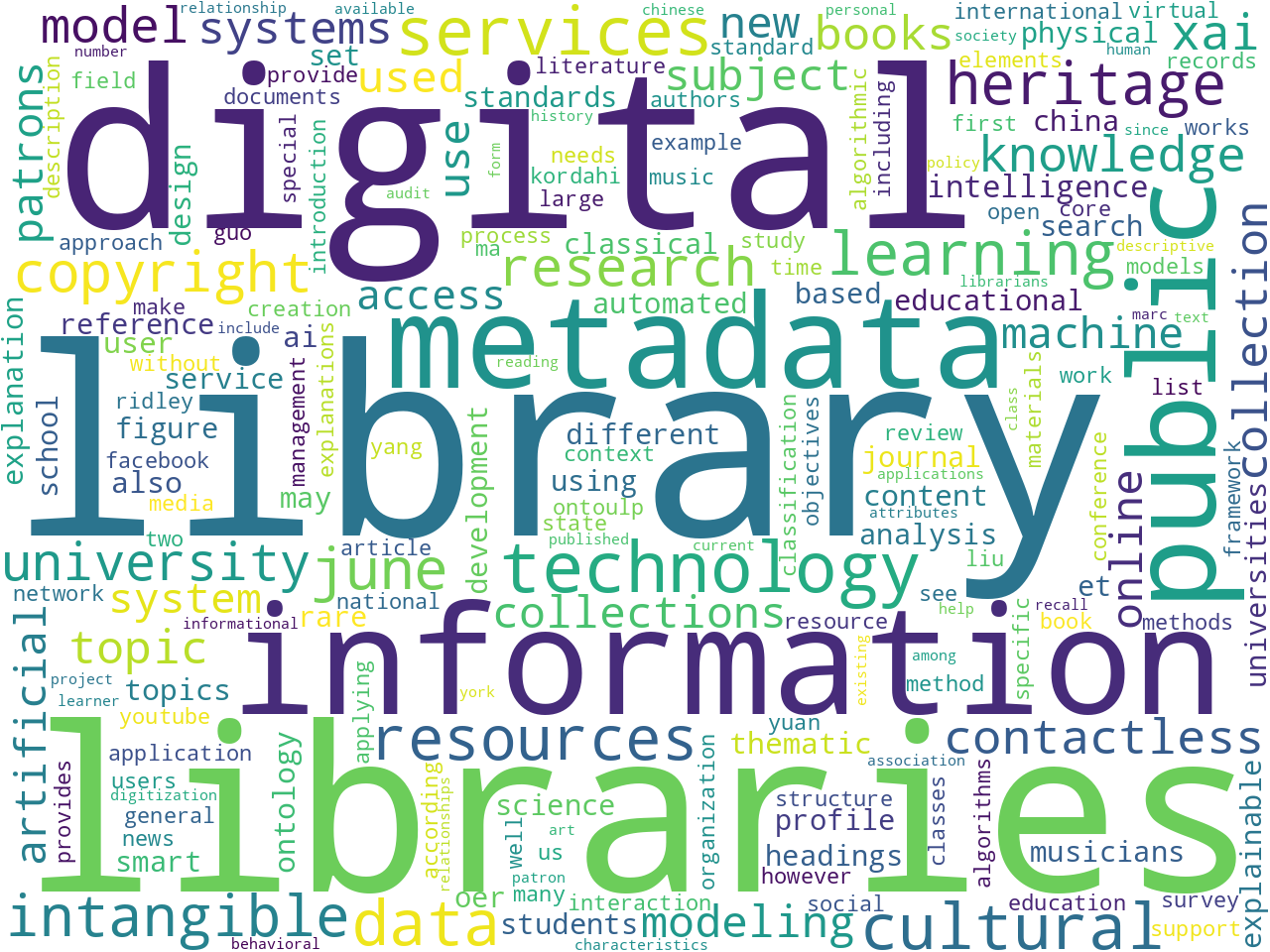

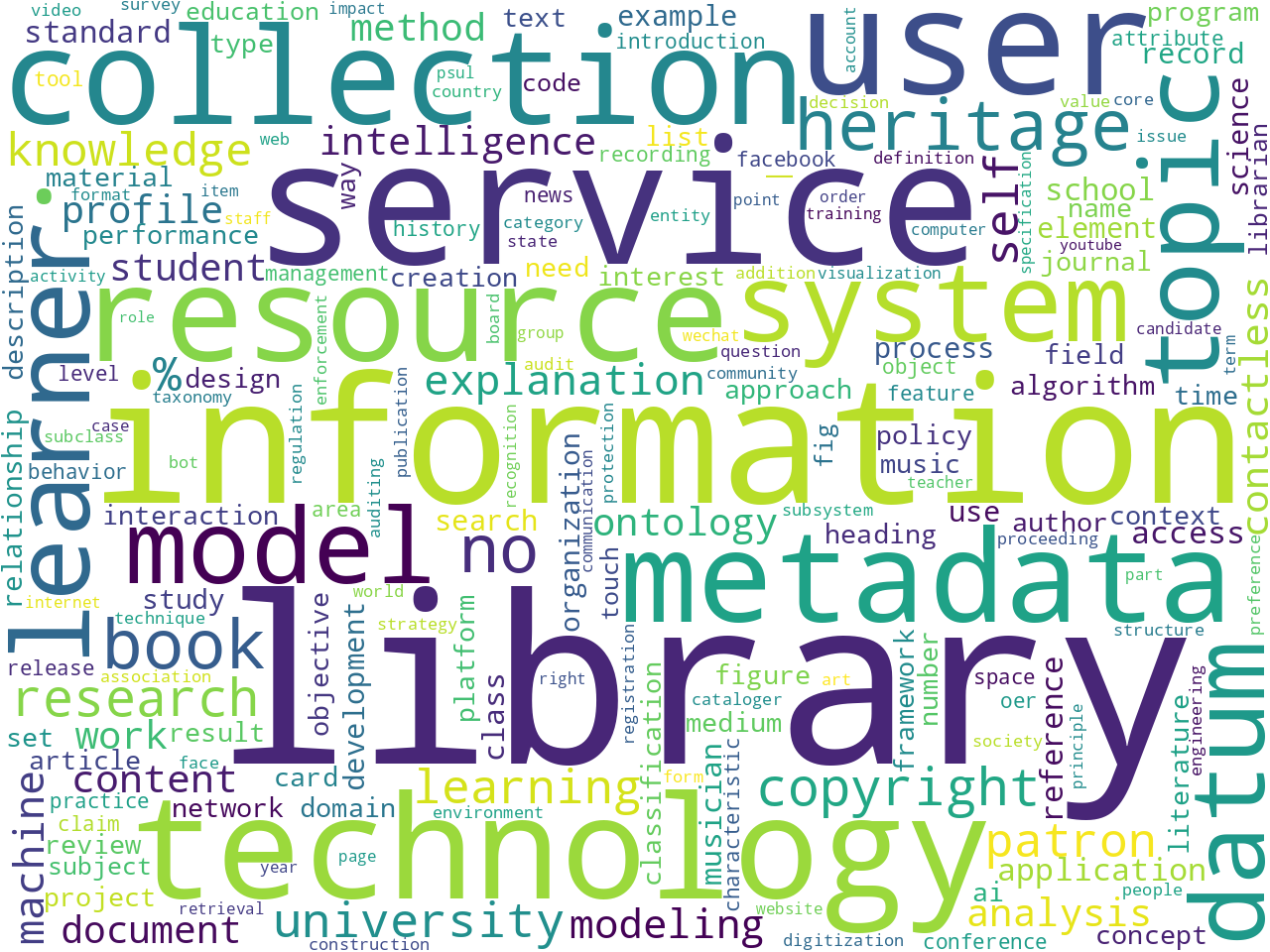

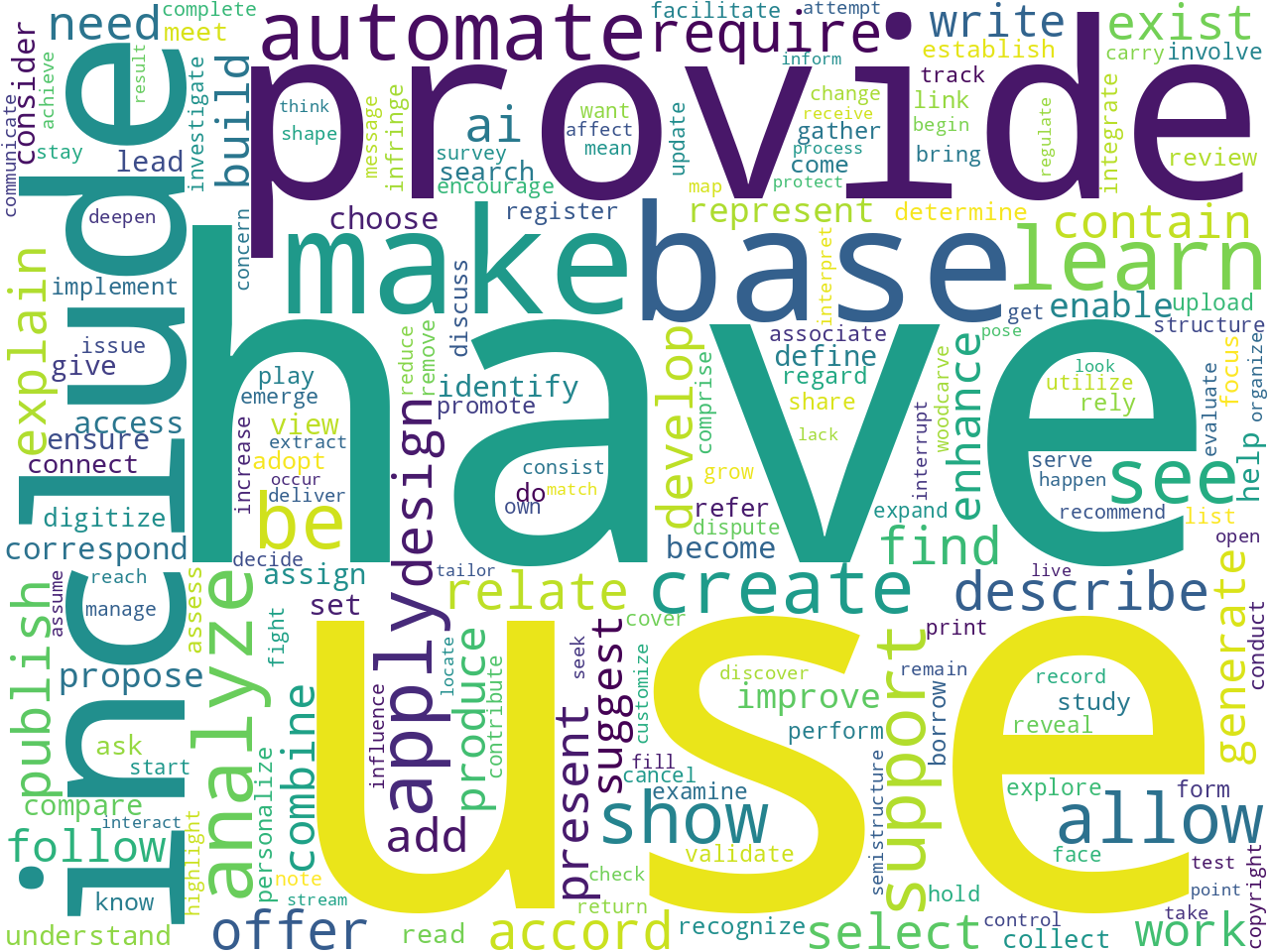

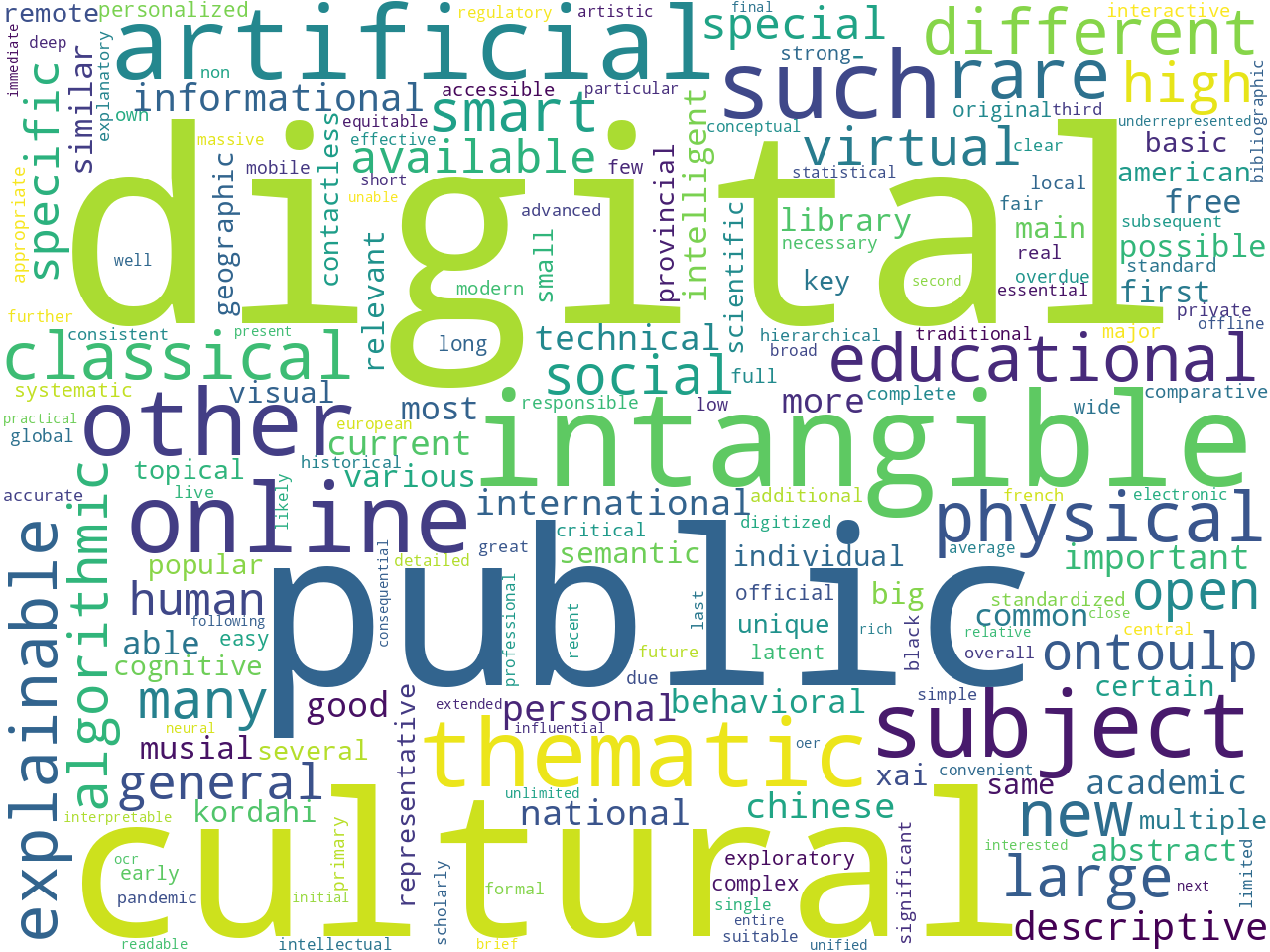

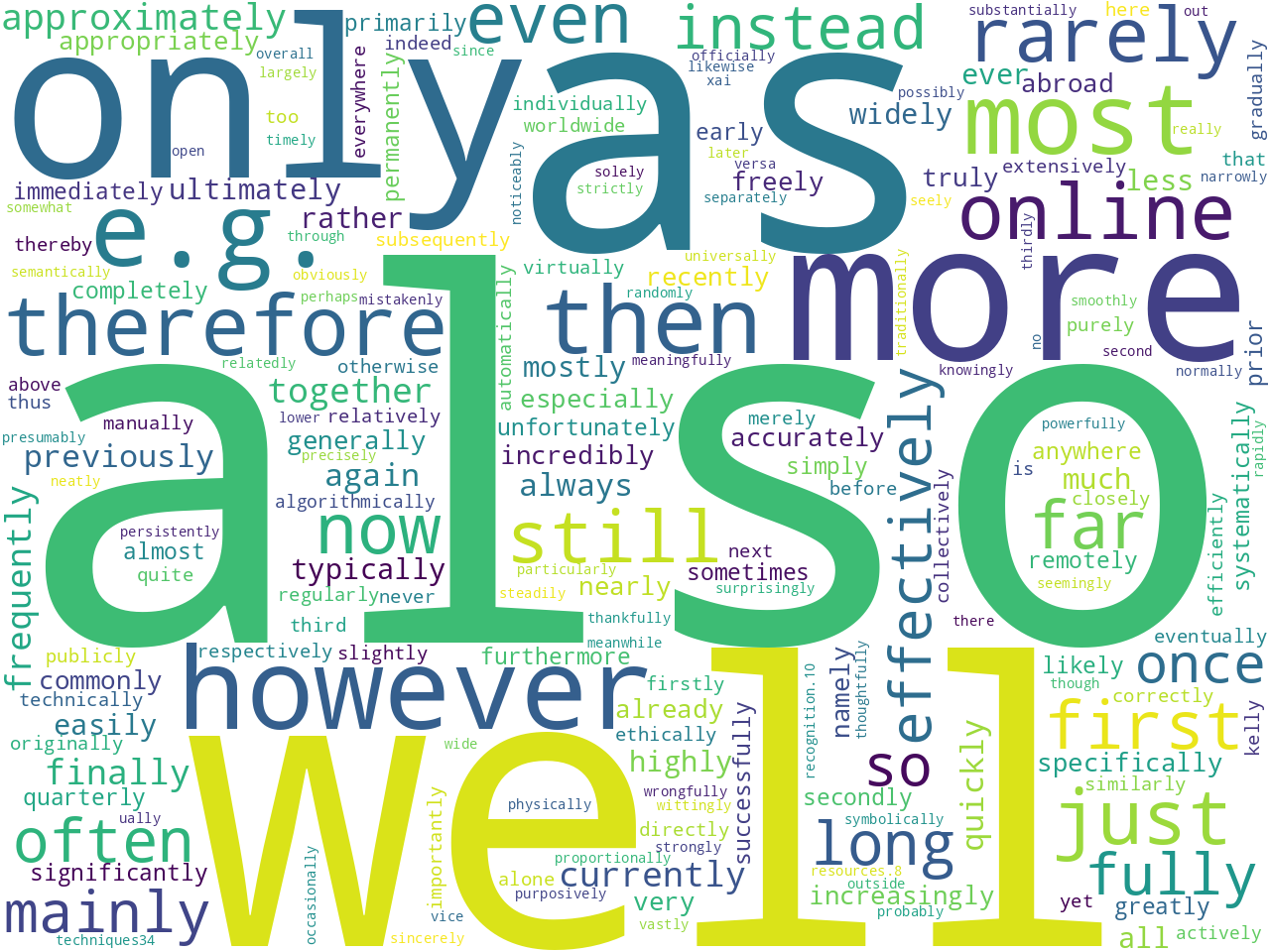

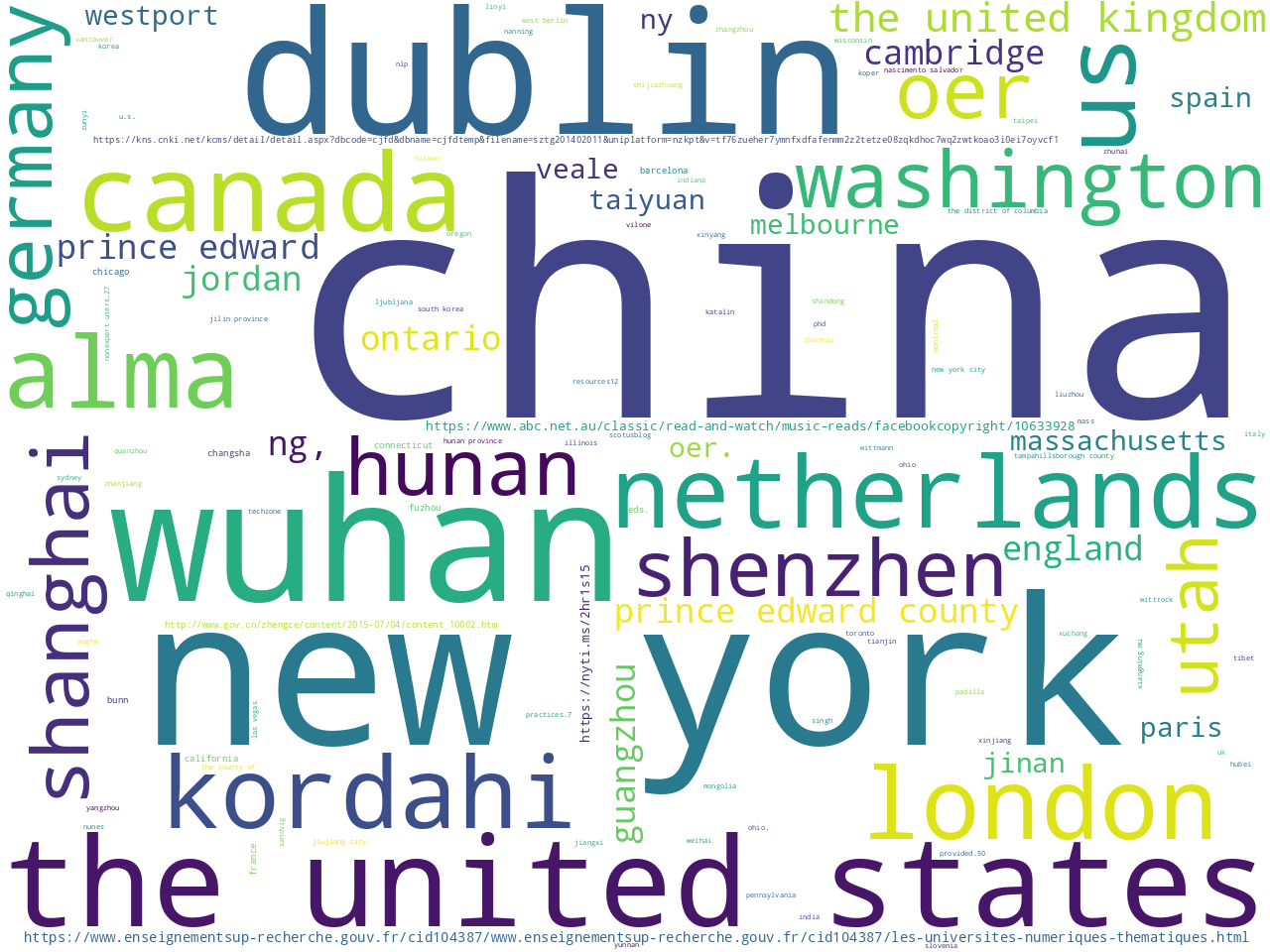

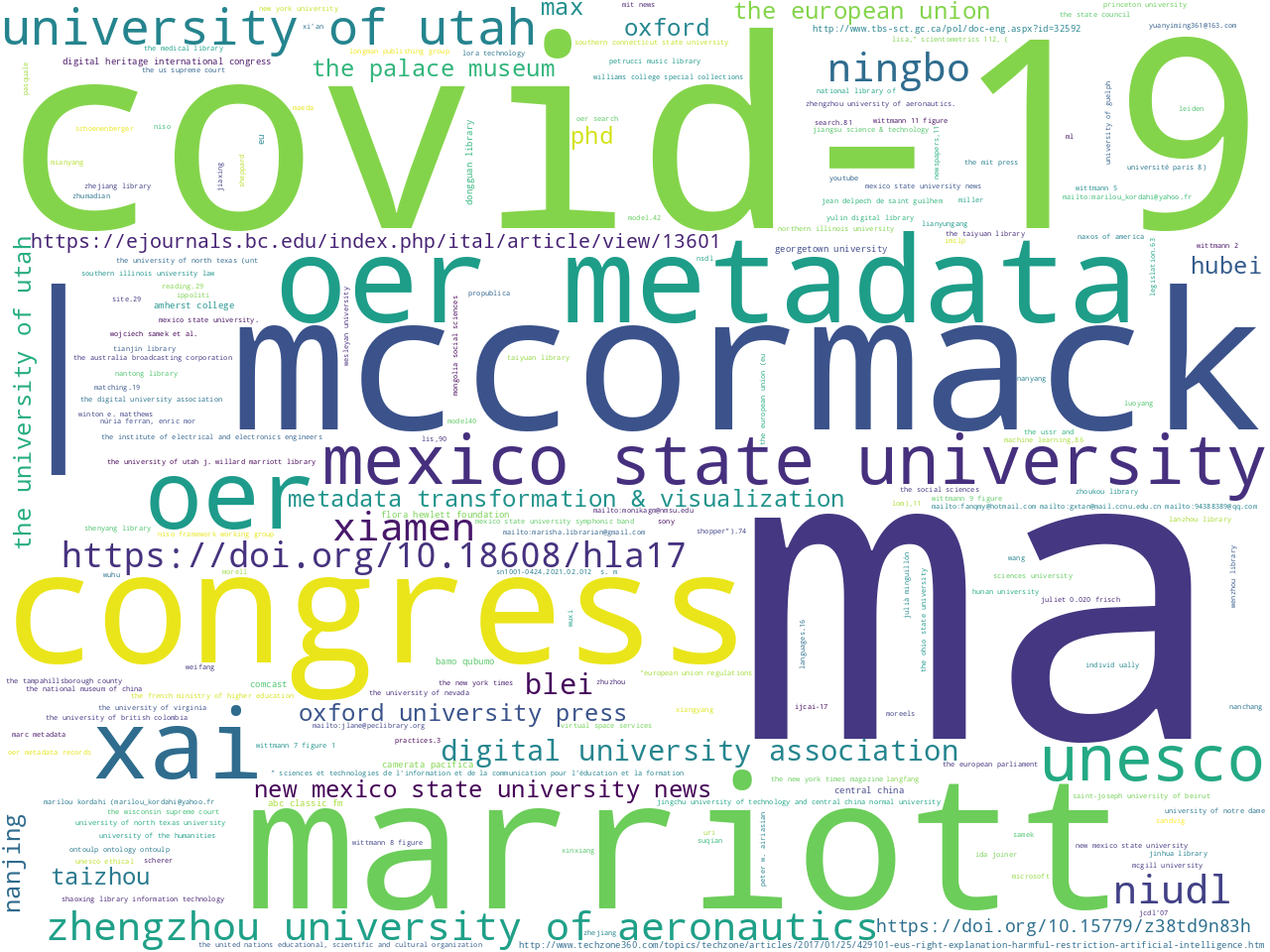

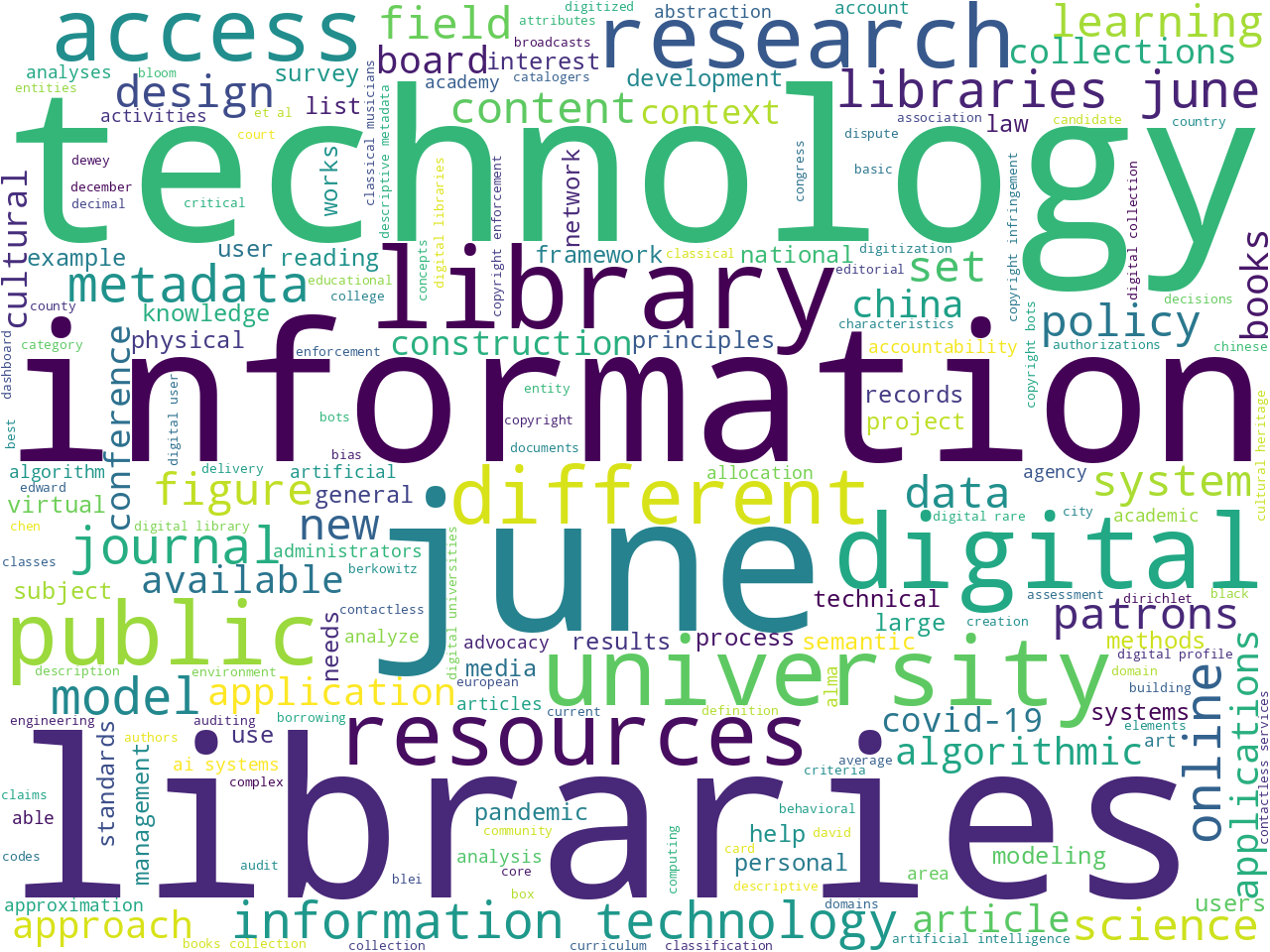

I then asked the question, "What did the authors write about?" To answer this question I first assumed each author wrote about something different from the others. After all, why would the authors all write about the same thing? Thus, using the "tm" command, I topic modeled the corpus using nine topics because there were nine authors. The result is the following list of topic labels, weights, and qualifying keywords:

| labels | weights | features |
|---|---|---|
| libraries | 0.41118 | libraries information technology june library ... |
| library | 0.13684 | library students school public access board co... |
| services | 0.07472 | library services libraries public contactless ... |
| digital | 0.06427 | digital collection books collections rare libr... |
| user-learner | 0.03948 | digital user-learner universities oer thematic... |
| metadata | 0.03845 | metadata cultural heritage intangible resource... |
| topic | 0.03520 | topic modeling subject headings topics digital... |
| copyright | 0.03381 | copyright classical musicians youtube music fa... |
| xai | 0.03213 | xai intelligence artificial machine learning s... |

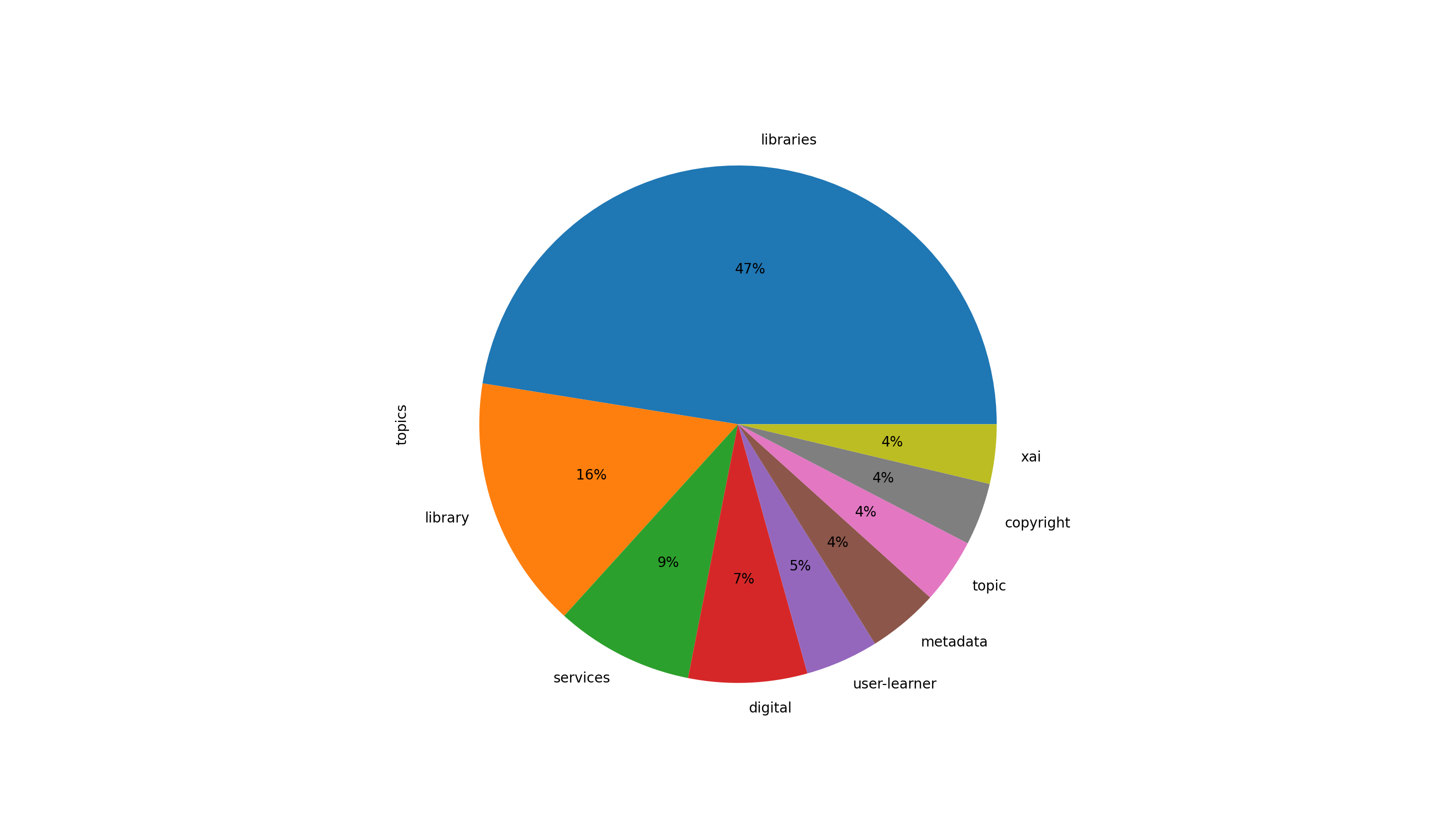

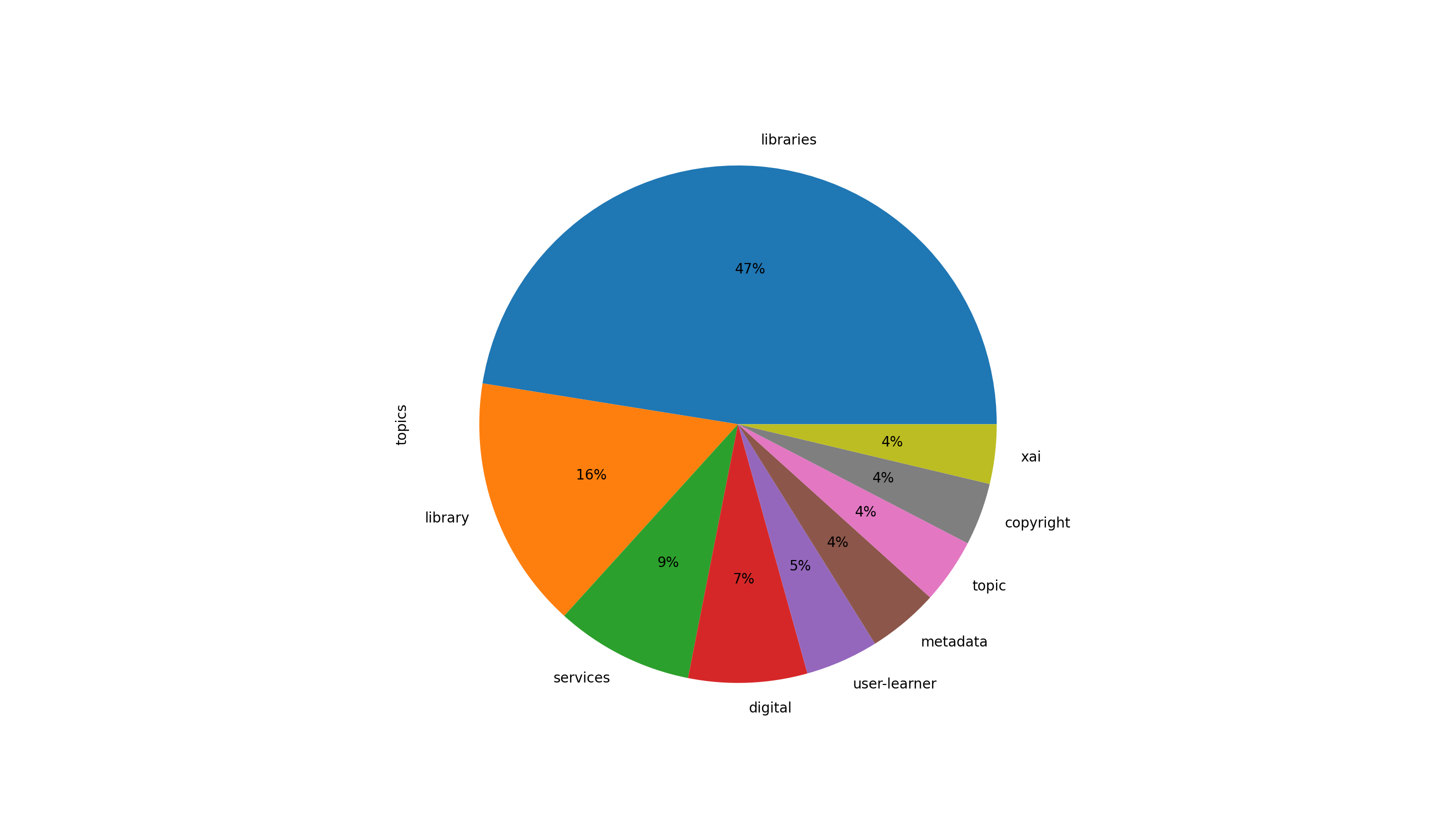

It is informative to visualize this result as a pie chart in an effort to determine the degree to which each topic is a part of the whole. From the result you literally see that the topics of "library" and "libraries" consume almost two-thirds of the issue, which makes perfect sense:

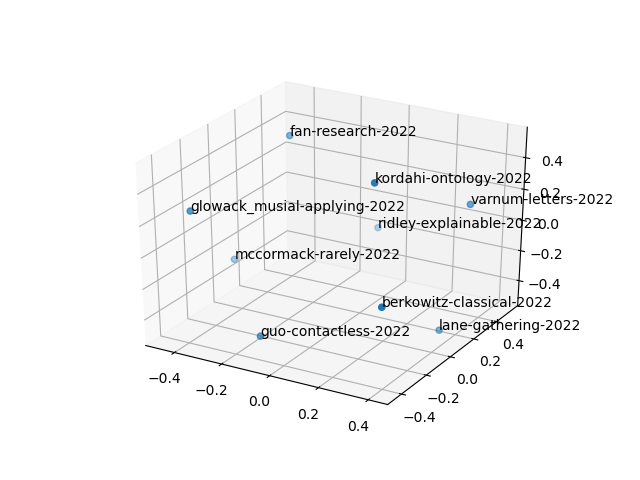

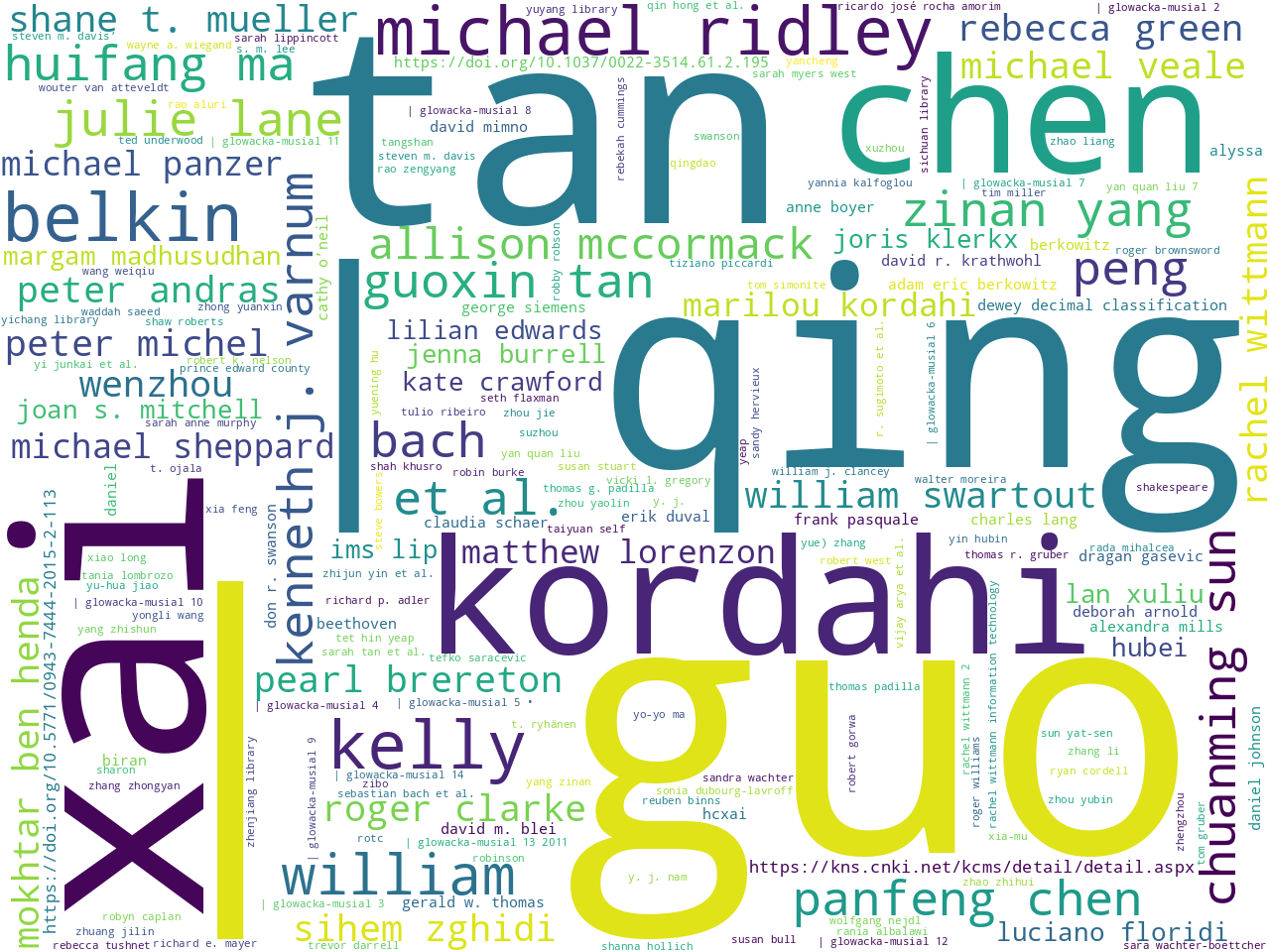

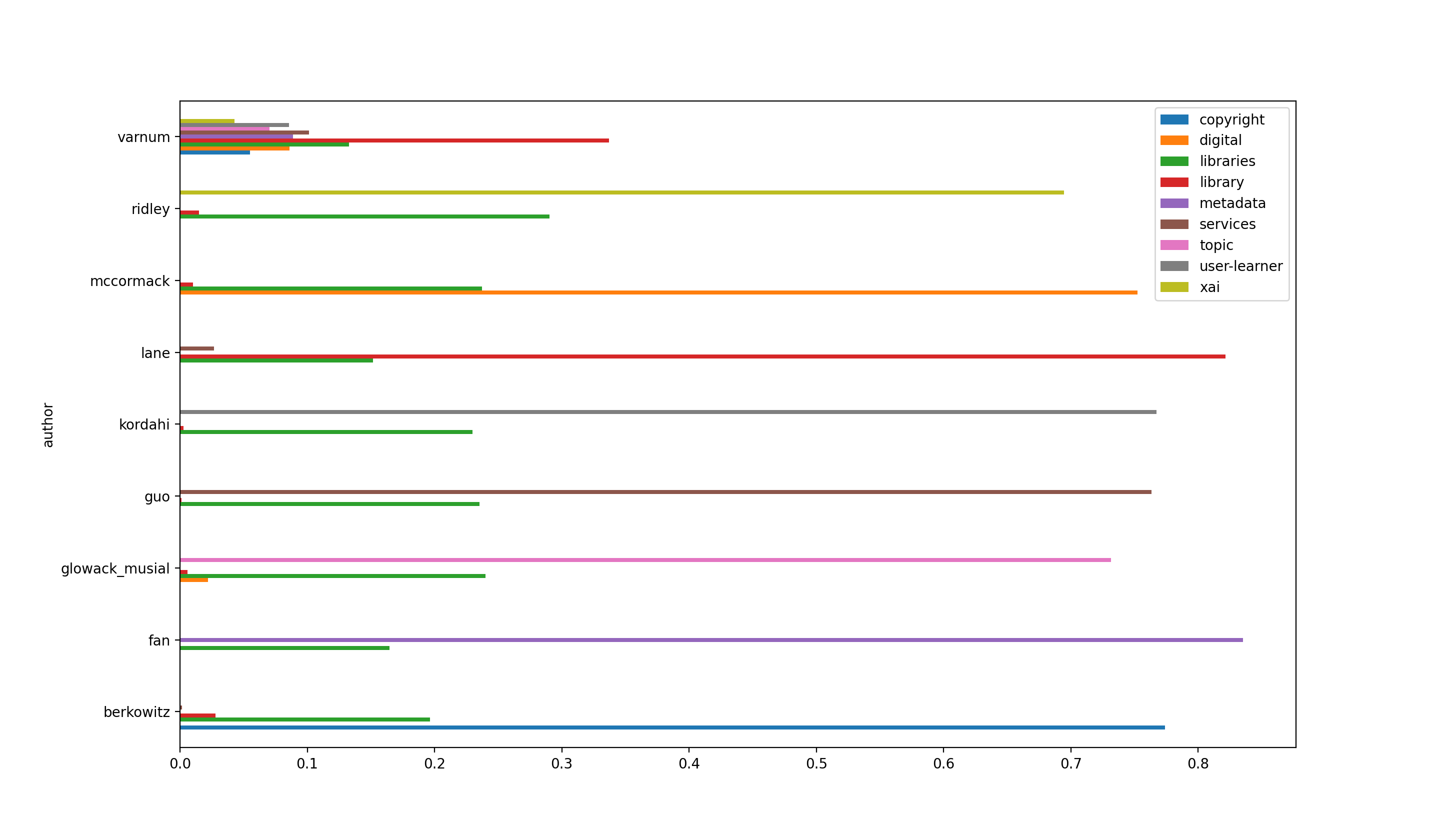
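The "almost two-thirds" figure can be checked against the topic weights listed earlier. This is a minimal sketch that assumes the pie chart normalizes the weights so that they sum to one; the variable names are illustrative only.

```python
# Topic weights copied from the topic model output listed earlier.
weights = {
    "libraries": 0.41118,
    "library": 0.13684,
    "services": 0.07472,
    "digital": 0.06427,
    "user-learner": 0.03948,
    "metadata": 0.03845,
    "topic": 0.03520,
    "copyright": 0.03381,
    "xai": 0.03213,
}

# Assumption: each slice of the pie is a weight divided by the sum of all weights.
total = sum(weights.values())
shares = {label: weight / total for label, weight in weights.items()}

# Combined share of the two library-related topics.
combined = shares["libraries"] + shares["library"]
print(f"{combined:.1%}")
```

Under that assumption the two topics together account for roughly 63 percent of the whole, which is consistent with the reading of the chart.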

It is more informative to supplement the topic model with author names, pivot the resulting table, and visualize the result to illustrate the degree to which each author wrote about each topic. As you can see, each author's writing is dominated by a single label, except Varnum's, who is probably introducing each of the articles. Notice also how each author talks about "libraries":

As a reality check, I took a gander at the computed bibliography generated by the Toolbox, and I compared it to the topics. While I am biased, the computed summary and computed keywords seem to jibe with the labels generated by the topic modeling process. This makes me believe my modeling process is at least somewhat valid.

Of all the labels, the label "xai" intrigued me the most. What is "xai"? To answer the question, I applied the Toolbox's "concordance" function by querying the corpus for "xai is". The result is below. While not necessarily complete, the result helped me understand that xai has something to do with artificial intelligence:

ntegral part of academic libraries . xai is a set of techniques , processes , an

d how the field might influence it.5 xai is a critical lens through which to vie

contemporary information systems and xai is a tool set for critical assessment a

blic policy advocacy . what is xai ? xai is plagued by definitional problems.8 s

scope of the field . the purpose of xai is for ai systems to have “ the ability

ificially intelligent partners. ” 13 xai is needed to : 1. generate trust , tran

g within a public policy framework . xai is not a new consideration . explainabi

he model . another way to categorize xai is as proofs , validations , and author

mmunication . xai as discovery while xai is primarily a means to validate and au

ne learning systems , another use of xai is gaining attention . since xai can fi

cterises the presence of trust. ” 85 xai is an essential tool to build that trus

orms of algorithmic accountability . xai is a dual opportunity for libraries . o

Simply concordancing for the term "xai" is even more informative, but the results are lengthy, so they have been cached locally.

So, what did I learn? I learned two things. First, rather selfishly, I learned that the Toolbox functions as designed. To me, that is a good thing. Second, I garnered quite a number of insights regarding the whole issue of a given scholarly journal. I pulled out themes that the reading of titles and abstracts may not have afforded me. I was also able to enumerate details of the issue that sparked questions in me, and I was able to follow up on those questions.

Finally, all of the data and the analysis run against it have been compressed into a single file for downloading. Given the Toolbox, you can do your own analysis in order to model the data in a different way and discover additional insights. Good luck, sincerely.

P.S. It took me about thirty minutes to collect the data, create the data set, and do the analysis. It took me almost ninety minutes to actually write up what I learned.

Eric Lease Morgan <emorgan@nd.edu>

University of Notre Dame

Date created: June 22, 2022

Date updated: May 30, 2024

| Creator | eric |

| Date created | 2022-06-20 |

| Number of items | 9 |

| Number of words | 43348 |

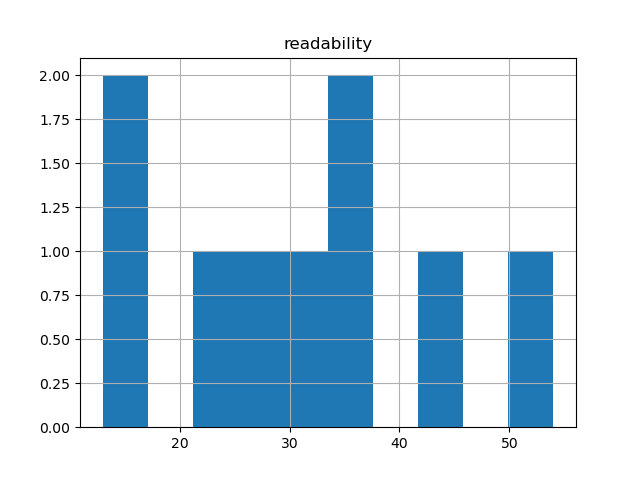

| Average readability score | 32 |

| Bibliography | plain text; HTML; JSON |